#software-defined data infrastructure


Enterprise-Grade Datacenter Network Solutions by Esconet

Esconet Technologies offers cutting-edge datacenter network solutions tailored for enterprises, HPC, and cloud environments. With support for high-speed Ethernet (10G to 400G), Software-Defined Networking (SDN), InfiniBand, and Fibre Channel technologies, Esconet ensures reliable, scalable, and high-performance connectivity. Their solutions are ideal for low-latency, high-bandwidth applications and are backed by trusted OEM partnerships with Cisco, Dell, and HPE. They are a good fit for businesses looking to modernize and secure their datacenter infrastructure. For more details, visit the Esconet Datacenter Network Page.

#Datacenter Network#Enterprise Networking#High-Speed Ethernet#Software-Defined Networking (SDN)#Infiniband Network#Fibre Channel Storage#Network Infrastructure#Data Center Solutions#10G to 400G Networking#Low Latency Networks#Esconet Technologies#Datacenter Connectivity#Leaf-Spine Architecture#Network Virtualization#High Performance Computing (HPC)


Anand Jayapalan on Why Software-Defined Storage is the Key to Future-Proofing Your Data Infrastructure

Anand Jayapalan: Exploring Software-Defined Storage as the Future of Scalable and Flexible Data Solutions

In today’s rapidly evolving technological landscape, the need for scalable and flexible data solutions has reached an all-time high. As businesses generate vast amounts of data, traditional storage methods are increasingly being pushed beyond their limits. This is where Software-Defined Storage (SDS) emerges as a game-changer. For data storage solutions experts like Anand Jayapalan, SDS represents a transformative approach that decouples storage hardware from the software that manages it. This separation offers unparalleled scalability, flexibility, and efficiency—crucial for meeting the demands of modern, data-driven enterprises.


As organizations grow and their data needs expand, the ability to scale storage solutions without significant hardware investments becomes critical. SDS allows companies to do just that by leveraging commodity hardware and abstracting the storage management layer. This abstraction enables businesses to scale their storage capacity seamlessly, whether they are handling on-premises data, cloud storage, or a hybrid environment. With SDS, the days of being locked into expensive, proprietary storage solutions are numbered.

Moreover, the flexibility offered by SDS is unmatched. It provides the agility needed to adapt to changing data requirements and workloads without the need for disruptive hardware upgrades. Whether you're dealing with structured or unstructured data, SDS can dynamically allocate resources to meet the specific demands of your applications. This level of customization ensures that businesses can optimize their storage infrastructure to maximize performance while minimizing costs.

The Advantages of Implementing Software-Defined Storage in Your Organization

One of the most significant benefits of SDS is its ability to simplify storage management. Traditional storage solutions often require specialized knowledge and manual intervention to manage, scale, and troubleshoot. In contrast, SDS automates many of these processes, reducing the need for specialized skills and allowing IT teams to focus on more strategic initiatives. This automation not only improves efficiency but also reduces the risk of human error, which can lead to data loss or downtime.

Another critical advantage of SDS is its ability to enhance data availability and resilience. By distributing data across multiple nodes and providing built-in redundancy, SDS ensures that your data is always accessible, even in the event of hardware failures. This level of reliability is essential for businesses that rely on uninterrupted access to their data for daily operations.
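The post doesn't say how an SDS layer decides which nodes hold each copy. As one illustration, here is a minimal Python sketch of replica placement using rendezvous (highest-random-weight) hashing, a technique chosen here for brevity; production systems use their own placement schemes (Ceph's CRUSH, for example). Node and object names are hypothetical. The sketch shows two properties the paragraph relies on: every object lands on several distinct nodes, so losing fewer nodes than the replica count never makes it unreadable, and adding a node moves at most one replica per object.

```python
import hashlib

def weight(node: str, key: str) -> int:
    """Deterministic pseudo-random weight for a (node, object) pair."""
    digest = hashlib.sha256(f"{node}/{key}".encode()).digest()
    return int.from_bytes(digest[:8], "big")

def place(key: str, nodes: list[str], replicas: int = 3) -> list[str]:
    """Choose `replicas` distinct nodes for `key`: the highest-weight nodes win."""
    if replicas > len(nodes):
        raise ValueError("not enough nodes for the requested replica count")
    return sorted(nodes, key=lambda n: weight(n, key), reverse=True)[:replicas]

def still_readable(key: str, nodes: list[str], failed: set[str], replicas: int = 3) -> bool:
    """True if at least one replica of `key` lives on a node that has not failed."""
    return any(n not in failed for n in place(key, nodes, replicas))

if __name__ == "__main__":
    cluster = ["node-a", "node-b", "node-c", "node-d", "node-e"]
    keys = [f"object-{i}" for i in range(1000)]
    before = {k: place(k, cluster) for k in keys}
    # Scale out by one node and count how many replicas had to move.
    after = {k: place(k, cluster + ["node-f"]) for k in keys}
    moved = sum(len(set(after[k]) - set(before[k])) for k in keys)
    print(f"replicas moved after adding node-f: {moved} of {3 * len(keys)}")
```

Because each node's weight for an object doesn't depend on which other nodes exist, a new node only displaces the lowest-ranked replica of the objects it "wins". With six nodes and three replicas, roughly a sixth of all replicas should move, whereas naive `hash(key) % N` placement would remap most objects.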

Finally, SDS delivers substantial cost savings compared to traditional storage solutions. By utilizing off-the-shelf hardware and minimizing reliance on costly, proprietary systems, businesses can achieve equivalent levels of performance and reliability at a fraction of the cost. For IT professionals like Anand Jayapalan, SDS's ability to scale storage resources as needed is particularly advantageous, ensuring that companies pay only for the storage they use, rather than over-provisioning to anticipate future growth.


In the span of just weeks, the U.S. government has experienced what may be the most consequential security breach in its history—not through a sophisticated cyberattack or an act of foreign espionage, but through official orders by a billionaire with a poorly defined government role. And the implications for national security are profound.

First, it was reported that people associated with the newly created Department of Government Efficiency (DOGE) had accessed the U.S. Treasury computer system, giving them the ability to collect data on and potentially control the department’s roughly $5.45 trillion in annual federal payments.

Then, we learned that uncleared DOGE personnel had gained access to classified data from the U.S. Agency for International Development, possibly copying it onto their own systems. Next, the Office of Personnel Management—which holds detailed personal data on millions of federal employees, including those with security clearances—was compromised. After that, Medicaid and Medicare records were compromised.

Meanwhile, only partially redacted names of CIA employees were sent over an unclassified email account. DOGE personnel are also reported to be feeding Education Department data into artificial intelligence software, and they have also started working at the Department of Energy.

This story is moving very fast. On Feb. 8, a federal judge blocked the DOGE team from accessing the Treasury Department systems any further. But given that DOGE workers have already copied data and possibly installed and modified software, it’s unclear how this fixes anything.

In any case, breaches of other critical government systems are likely to follow unless federal employees stand firm on the protocols protecting national security.

The systems that DOGE is accessing are not esoteric pieces of our nation’s infrastructure—they are the sinews of government.

For example, the Treasury Department systems contain the technical blueprints for how the federal government moves money, while the Office of Personnel Management (OPM) network contains information on who and what organizations the government employs and contracts with.

What makes this situation unprecedented isn’t just the scope, but also the method of attack. Foreign adversaries typically spend years attempting to penetrate government systems such as these, using stealth to avoid being seen and carefully hiding any tells or tracks. The Chinese government’s 2015 breach of OPM was a significant U.S. security failure, and it illustrated how personnel data could be used to identify intelligence officers and compromise national security.

In this case, external operators with limited experience and minimal oversight are doing their work in plain sight and under massive public scrutiny: gaining the highest levels of administrative access and making changes to the United States’ most sensitive networks, potentially introducing new security vulnerabilities in the process.

But the most alarming aspect isn’t just the access being granted. It’s the systematic dismantling of security measures that would detect and prevent misuse—including standard incident response protocols, auditing, and change-tracking mechanisms—by removing the career officials in charge of those security measures and replacing them with inexperienced operators.

The Treasury’s computer systems have such an impact on national security that they were designed with the same principle that guides nuclear launch protocols: No single person should have unlimited power. Just as launching a nuclear missile requires two separate officers turning their keys simultaneously, making changes to critical financial systems traditionally requires multiple authorized personnel working in concert.

This approach, known as “separation of duties,” isn’t just bureaucratic red tape; it’s a fundamental security principle as old as banking itself. When your local bank processes a large transfer, it requires two different employees to verify the transaction. When a company issues a major financial report, separate teams must review and approve it. These aren’t just formalities—they’re essential safeguards against corruption and error.
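In software, separation of duties usually appears as a change-approval rule. The Python sketch below is purely illustrative and is not taken from any Treasury or banking system: it assumes a policy in which a change needs two approvers who are both on an authorized list and neither of whom is the person requesting the change. The `ChangeRequest` class and the names used with it are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class ChangeRequest:
    """Hypothetical change to a critical system that must pass separation of duties."""
    requester: str
    description: str
    authorized: frozenset[str]            # people allowed to approve changes
    required: int = 2                     # independent approvals needed
    approvals: set[str] = field(default_factory=set)

    def approve(self, reviewer: str) -> None:
        if reviewer == self.requester:
            raise PermissionError("a requester cannot approve their own change")
        if reviewer not in self.authorized:
            raise PermissionError(f"{reviewer} is not an authorized approver")
        self.approvals.add(reviewer)      # a set: approving twice still counts once

    def can_apply(self) -> bool:
        return len(self.approvals) >= self.required

if __name__ == "__main__":
    req = ChangeRequest("alice", "update payment verification rules",
                        authorized=frozenset({"alice", "bob", "carol"}))
    req.approve("bob")
    print(req.can_apply())   # False: only one independent approval so far
    req.approve("bob")
    print(req.can_apply())   # False: the same reviewer cannot count twice
    req.approve("carol")
    print(req.can_apply())   # True
```

The point of the design is that no single account, including an administrator's, can push a change through alone; bypassing the rule requires removing the check itself.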

These measures have been bypassed or ignored. It’s as if someone found a way to rob Fort Knox by simply declaring that the new official policy is to fire all the guards and allow unescorted visits to the vault.

The implications for national security are staggering. Sen. Ron Wyden said his office had learned that the attackers gained privileges that allow them to modify core programs in Treasury Department computers that verify federal payments, access encrypted keys that secure financial transactions, and alter audit logs that record system changes. Over at OPM, reports indicate that individuals associated with DOGE connected an unauthorized server into the network. They are also reportedly training AI software on all of this sensitive data.

This is much more critical than the initial unauthorized access. These new servers have unknown capabilities and configurations, and there’s no evidence that this new code has gone through any rigorous security testing protocols. The AIs being trained are certainly not secure enough for this kind of data. All are ideal targets for any adversary, foreign or domestic, also seeking access to federal data.

There’s a reason why every modification—hardware or software—to these systems goes through a complex planning process and includes sophisticated access-control mechanisms. The national security crisis is that these systems are now much more vulnerable to dangerous attacks at the same time that the legitimate system administrators trained to protect them have been locked out.

By modifying core systems, the attackers have not only compromised current operations, but have also left behind vulnerabilities that could be exploited in future attacks—giving adversaries such as Russia and China an unprecedented opportunity. These countries have long targeted these systems. And they don’t just want to gather intelligence—they also want to understand how to disrupt these systems in a crisis.

The technical details of how these systems operate, their security protocols, and their vulnerabilities are now potentially exposed to unknown parties without any of the usual safeguards. Instead of having to breach heavily fortified digital walls, these parties can simply walk through doors that are being propped open, and then erase evidence of their actions.

The security implications span three critical areas.

First, system manipulation: External operators can now modify operations while also altering audit trails that would track their changes. Second, data exposure: Beyond accessing personal information and transaction records, these operators can copy entire system architectures and security configurations—in one case, the technical blueprint of the country’s federal payment infrastructure. Third, and most critically, is the issue of system control: These operators can alter core systems and authentication mechanisms while disabling the very tools designed to detect such changes. This is more than modifying operations; it is modifying the infrastructure that those operations use.

To address these vulnerabilities, three immediate steps are essential. First, unauthorized access must be revoked and proper authentication protocols restored. Next, comprehensive system monitoring and change management must be reinstated—which, given the difficulty of cleaning a compromised system, will likely require a complete system reset. Finally, thorough audits must be conducted of all system changes made during this period.

This is beyond politics—this is a matter of national security. Foreign national intelligence organizations will be quick to take advantage of both the chaos and the new insecurities to steal U.S. data and install backdoors to allow for future access.

Each day of continued unrestricted access makes the eventual recovery more difficult and increases the risk of irreversible damage to these critical systems. While the full impact may take time to assess, these steps represent the minimum necessary actions to begin restoring system integrity and security protocols.

Assuming that anyone in the government still cares.


Demystifying cloud computing: the future of technology.

In today's rapidly evolving digital world, cloud computing is not just a technology trend; it is the foundation of today's IT infrastructure, powering everything from streaming your favourite Netflix show to collaborating on Google Docs.

What is cloud computing?

Cloud computing is the provision of computing services including the servers, databases, storage, networking, software, analytics, and intelligence over the internet to offer faster innovation, flexible resources, and economies of scale.

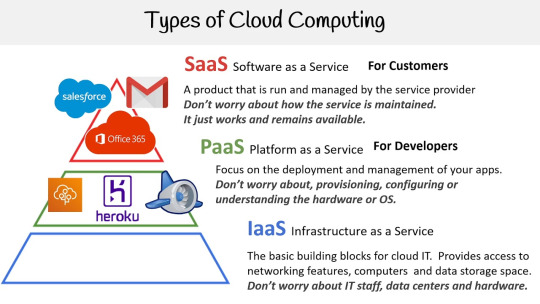

Types of cloud services.

Security in the cloud.

Security is the top priority. Cloud vendors employ encryption, firewalls, multi-factor authentication, and periodic audits to secure data. Organizations, however, must still set up and manage secure access themselves.
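Multi-factor authentication often relies on the six-digit codes shown by authenticator apps, which are usually time-based one-time passwords (TOTP, RFC 6238, built on HOTP from RFC 4226). The post doesn't say which mechanism any particular vendor uses; this Python sketch only shows how such a code is derived from a shared secret and checked, using the standard library. The Base32 secret in the example is a made-up placeholder.

```python
import base64
import hashlib
import hmac
import struct
import time
from typing import Optional

def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) with HMAC-SHA1."""
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                                   # dynamic truncation
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

def totp(key: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP over the number of `step`-second periods."""
    now = time.time() if at is None else at
    return hotp(key, int(now // step), digits)

def verify(key: bytes, submitted: str, at: Optional[float] = None,
           step: int = 30, window: int = 1) -> bool:
    """Accept the current period's code or one from `window` periods either side (clock drift)."""
    now = time.time() if at is None else at
    for drift in range(-window, window + 1):
        expected = totp(key, now + drift * step, step, len(submitted))
        if hmac.compare_digest(expected, submitted):   # constant-time comparison
            return True
    return False

if __name__ == "__main__":
    secret = base64.b32decode("JBSWY3DPEHPK3PXP")   # placeholder shared secret
    code = totp(secret)
    print(code, verify(secret, code))
```

The server and the user's device each hold the same secret, so a stolen password alone is not enough to log in; the attacker also needs the device that generates the current code.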

The future of cloud computing.

The future of cloud computing is being defined by a number of groundbreaking trends that are changing the way data and applications are processed. Edge computing, for example, moves computation closer to data sources to reduce latency and improve real-time processing for IoT and mobile applications.
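A back-of-the-envelope calculation shows why distance matters. This Python sketch assumes light travels through optical fibre at roughly 200,000 km/s (about two-thirds of its speed in a vacuum) and counts propagation delay only; real round trips add routing, queuing, and processing time, and fibre routes are longer than straight-line distances. The two distances are illustrative, not measurements.

```python
# Assumption: ~200,000 km/s in fibre, i.e. about 200 km per millisecond.
FIBRE_KM_PER_MS = 200.0

def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * distance_km / FIBRE_KM_PER_MS

if __name__ == "__main__":
    for label, km in [("distant cloud region", 2000), ("nearby edge site", 50)]:
        print(f"{label}: at least {min_round_trip_ms(km):.1f} ms per round trip")
```

This prints 20.0 ms for the distant region and 0.5 ms for the edge site. Faster servers at the far end cannot remove that 20 ms floor, which is why latency-sensitive IoT and mobile workloads benefit from running closer to where the data is produced.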


Windows Server 2025 Standard vs Datacenter

The Standard and Datacenter editions of Windows Server 2025 differ significantly in features, virtualization support, and pricing. Here are the main differences:

1. Virtualization Support

Windows Server 2025 Standard: Each license allows 2 virtual machines (VMs) plus 1 Hyper-V host.

Windows Server 2025 Datacenter: Provides unlimited virtual machines, making it ideal for large-scale virtualization environments.

2. Container Support

Windows Server 2025 Standard: Supports unlimited Windows containers but is limited to 2 Hyper-V containers.

Windows Server 2025 Datacenter: Supports unlimited Windows containers and Hyper-V containers.

3. Storage Features

Windows Server 2025 Standard:

Storage Replica is limited to 1 partnership and 1 volume (up to 2 TB).

Does not support Storage Spaces Direct.

Windows Server 2025 Datacenter:

Unlimited Storage Replica partnerships.

Supports Storage Spaces Direct, enabling hyper-converged infrastructure (HCI).

4. Advanced Features

Windows Server 2025 Standard:

No support for Software-Defined Networking (SDN), Network Controller, or Shielded VMs.

No Host Guardian Hyper-V Support.

Windows Server 2025 Datacenter:

Supports SDN, Network Controller, and Shielded VMs, enhancing security and management.

Supports GPU partitioning, useful for AI/GPU-intensive workloads.

5. Pricing

Windows Server 2025 Standard:

$80.00 (includes 16 cores) at keyingo.com.

Windows Server 2025 Datacenter:

$90.00 (includes 16 cores) at keyingo.com.
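The VM limits in section 1 and the 16-core figures above both come from per-core licensing. The Python sketch below works through the arithmetic, assuming the rules Microsoft has used since Windows Server 2016 still apply to 2025: every physical core must be licensed, with a minimum of 8 core licenses per processor and 16 per server; Standard covers two VMs each time all cores are licensed (so more VMs mean licensing the cores again); Datacenter covers unlimited VMs once all cores are licensed. Check Microsoft's current licensing terms before buying; the server in the example is hypothetical.

```python
import math

MIN_CORES_PER_PROCESSOR = 8
MIN_CORES_PER_SERVER = 16

def licensed_cores(cores_per_processor: list[int]) -> int:
    """Cores to license once, after applying the per-CPU and per-server minimums."""
    per_cpu_total = sum(max(MIN_CORES_PER_PROCESSOR, c) for c in cores_per_processor)
    return max(MIN_CORES_PER_SERVER, per_cpu_total)

def core_licenses_needed(cores_per_processor: list[int], vms: int, edition: str) -> int:
    """Total core licenses for one physical host running `vms` Windows Server VMs."""
    cores = licensed_cores(cores_per_processor)
    edition = edition.lower()
    if edition == "datacenter":
        return cores                          # unlimited VMs once all cores are licensed
    if edition == "standard":
        rounds = max(1, math.ceil(vms / 2))   # each full licensing round covers 2 VMs
        return cores * rounds
    raise ValueError("edition must be 'standard' or 'datacenter'")

if __name__ == "__main__":
    host = [12, 12]   # hypothetical two-socket server, 12 cores per CPU
    for vms in (2, 6, 12):
        std = core_licenses_needed(host, vms, "standard")
        dc = core_licenses_needed(host, vms, "datacenter")
        print(f"{vms:>2} VMs: Standard {std} core licenses, Datacenter {dc}")
```

On that host, Standard needs 24 core licenses for two VMs but 72 for six, while Datacenter stays at 24 regardless, which is why Datacenter pays off as VM density grows.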

Summary:

Windows Server 2025 Standard: Best for small businesses or physical server deployments with low virtualization needs.

Windows Server 2025 Datacenter: Designed for large-scale virtualization, hyper-converged infrastructure, and high-security environments, such as cloud providers and enterprise data centers.

#Windows Server 2025 Standard vs Datacenter#Windows Server 2025 Standard and Datacenter difference#Compare Windows Server 2025 Standard and Datacenter


Unleashing Innovation: How Intel is Shaping the Future of Technology

Introduction

In the fast-paced world of technology, few companies have managed to stay at the forefront of innovation as consistently as Intel. With a history spanning over five decades, Intel has transformed from a small semiconductor manufacturer into a global powerhouse that plays a pivotal role in shaping how we interact with technology today. From personal computing to artificial intelligence (AI) and beyond, Intel's innovations have not only defined industries but have also created new markets altogether.


In this comprehensive article, we'll delve deep into how Intel is unleashing innovation and shaping the future of technology across various domains. We’ll explore its history, key products, groundbreaking research initiatives, sustainability efforts, and much more. Buckle up as we take you on a journey through Intel’s dynamic landscape.


Intel's commitment to innovation is foundational to its mission. The company invests billions annually in research and development (R&D), ensuring that it remains ahead of market trends and consumer demands. This relentless pursuit of excellence manifests in several key areas:

The Evolution of Microprocessors

A Brief History of Intel's Microprocessors

Intel's microprocessor journey began with the 4004, its first microprocessor, launched in 1971. Since then, microprocessor technology has evolved dramatically, with each generation bringing improvements in processing power and energy efficiency that changed the way consumers use technology.

The Impact on Personal Computing

Microprocessors are at the heart of every personal computer (PC). They dictate performance capabilities that directly influence user experience. By continually optimizing their designs, Intel has played a crucial role in making PCs faster and more powerful.

Revolutionizing Data Centers

High-Performance Computing Solutions

Data centers are essential for businesses to store and process massive amounts of information. Intel's high-performance computing solutions are designed to handle complex workloads efficiently. Their Xeon processors are specifically optimized for data center applications.

Cloud Computing and Virtualization

As cloud services become increasingly popular, Intel has developed technologies that support virtualization and cloud infrastructure. This innovation allows businesses to scale operations rapidly without compromising performance.

Artificial Intelligence: A New Frontier

Intel’s AI Strategy

AI represents one of the most significant technological advancements today. Intel recognizes this potential and has positioned itself as a leader in AI hardware and software solutions. Acquisitions such as Movidius and Habana Labs have significantly strengthened its AI portfolio.

AI-Powered Devices

From smart assistants to autonomous vehicles, AI is embedded in countless devices today thanks to advancements by companies like Intel. These innovations enhance user experience by providing personalized services based on data analysis.

Internet of Things (IoT): Connecting Everything

The Role of IoT in Smart Cities


Derad Network: The Crypto Project That's Taking Aviation to New Heights https://www.derad.net/

Hey Tumblr fam, let's talk about something wild: a blockchain project that's not just about making money, but about making the skies safer. Meet Derad Network, a Decentralized Physical Infrastructure Network (DePIN) that's using crypto magic to revolutionize how we track planes. If you're into tech, aviation, or just love seeing Web3 do cool stuff in the real world, this one's for you. Buckle up: here's the scoop.

What's Derad Network?

Picture this: every plane in the sky is constantly beaming out its location, speed, and altitude via something called ADS-B (Automatic Dependent Surveillance-Broadcast). Think of it as planes shouting out their own GPS position, way sharper than old-school radar. But here's the catch: those signals need ground stations to catch them, and there aren't enough out there, especially in remote spots like mountains or over the ocean. That's where Derad Network swoops in.

Instead of waiting for some big corporation or government to build more stations, Derad says, "Why not let anyone do it?" They've built a decentralized network where regular people (you, me, your neighbor with a Raspberry Pi) can host ADS-B stations or process flight data and get paid in DRD tokens. It's a community-powered vibe that fills the gaps in flight tracking, making flying safer and giving us all a piece of the action. Oh, and it's all locked down with blockchain, so the data's legit and tamper-proof. Cool, right?

How It Actually Works

Derad's setup is super approachable, which is why I'm obsessed. There are two ways to jump in:

Ground Stations: Got a corner of your room and a decent Wi-Fi signal? You can set up an ADS-B ground station with some affordable gear: a software-defined radio (SDR) dongle, an antenna tuned for 1090 MHz, and a little computer. These stations grab signals from planes flying overhead, collecting stuff like "this Boeing 737 is at 30,000 feet going 500 mph." You send that data to the network and boom, DRD tokens hit your wallet. It's like mining crypto, but instead of solving math puzzles, you're helping pilots stay safe.

Data Nodes: Not into hardware? You can still play. Run a data processing node on your laptop or whatever spare device you've got lying around. These nodes take the raw info from ground stations, clean it up, and make it useful for whoever needs it, like airlines or air traffic nerds. You get DRD for that too. It's a chill way to join without needing to turn your place into a tech lab.
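To make the ground-station step a bit more concrete, here is a minimal Python sketch of what happens after the SDR and a demodulator (for example the open-source dump1090) have turned the 1090 MHz signal into a 112-bit ADS-B frame written in hex. It pulls out the downlink format, the aircraft's 24-bit ICAO address, the type code, and, for identification messages, the callsign. It skips the CRC parity check that real decoders perform, and it is not Derad's software; the sample frame is one widely used in public ADS-B decoding tutorials.

```python
import string

# 64-entry character table for ADS-B identification messages (6 bits per character);
# index 32 is a space and unused positions are marked '#'.
CHARSET = "#" + string.ascii_uppercase + "#" * 5 + " " + "#" * 15 + string.digits + "#" * 6

def decode(frame_hex: str) -> dict:
    """Decode the basic fields of a 112-bit Mode S extended squitter (no CRC check)."""
    if len(frame_hex) != 28:
        raise ValueError("expected 28 hex digits (112 bits)")
    bits = bin(int(frame_hex, 16))[2:].zfill(112)
    df = int(bits[0:5], 2)            # downlink format; 17 = ADS-B from a transponder
    icao = frame_hex[2:8].upper()     # bits 8-31: 24-bit aircraft address
    me = bits[32:88]                  # 56-bit ADS-B message field
    tc = int(me[0:5], 2)              # type code; 1-4 = aircraft identification
    result = {"df": df, "icao": icao, "tc": tc}
    if df == 17 and 1 <= tc <= 4:
        # Eight 6-bit characters follow the 5-bit type code and 3-bit category.
        chars = [CHARSET[int(me[8 + 6 * i:14 + 6 * i], 2)] for i in range(8)]
        result["callsign"] = "".join(chars).strip()
    return result

if __name__ == "__main__":
    print(decode("8D4840D6202CC371C32CE0576098"))
```

For that sample frame the sketch reports aircraft 4840D6 with callsign KLM1023. Position and velocity messages use other type codes and need more decoding work, which is the kind of job the post assigns to data nodes.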

All this data flows into a blockchain (Layer 1, for the tech heads), keeping it secure and transparent. Derad's even eyeing permanent storage with Arweave, so nothing gets lost. Then, companies or regulators can buy that data with DRD through a marketplace. It's a whole ecosystem where we're the backbone, and I'm here for it.
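The "tamper-proof" claim rests on hash chaining: each block stores a hash of the one before it, so editing an old record breaks the chain. Strictly speaking, that makes the data tamper-evident (changes can be detected, and agreement among many independent nodes makes them hard to push through) rather than impossible to alter. The Python sketch below shows only the chaining idea; it has no consensus, signatures, or networking, and its block layout and records are invented for illustration rather than taken from Derad's chain.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64

def block_hash(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the block body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

def append_block(chain: list[dict], records: list[dict]) -> None:
    """Add a block that commits to its records and to the previous block's hash."""
    prev = chain[-1]["hash"] if chain else GENESIS_PREV
    body = {"index": len(chain), "prev_hash": prev, "records": records}
    chain.append({**body, "hash": block_hash(body)})

def chain_is_valid(chain: list[dict]) -> bool:
    """Recompute every hash and link; any edited record makes this return False."""
    prev = GENESIS_PREV
    for i, block in enumerate(chain):
        body = {k: v for k, v in block.items() if k != "hash"}
        if block["index"] != i or block["prev_hash"] != prev:
            return False
        if block_hash(body) != block["hash"]:
            return False
        prev = block["hash"]
    return True

if __name__ == "__main__":
    chain: list[dict] = []
    append_block(chain, [{"icao": "4840D6", "callsign": "KLM1023"}])
    append_block(chain, [{"icao": "40621D", "altitude_ft": 38000}])
    print(chain_is_valid(chain))                   # True
    chain[0]["records"][0]["callsign"] = "XXX123"  # tamper with an old record
    print(chain_is_valid(chain))                   # False
```

A forger could recompute every later hash on their own copy, which is why real blockchains add consensus across many nodes: the honest copies would disagree with the rewritten one.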

DRD Tokens: Crypto with a Purpose

The DRD token is the star of the show. You earn it by hosting a station or running a node, and businesses use it to grab the flight data they need. It's not just some random coin to trade; it's got real juice because it's tied to a legit use case. The more people join, the more data flows, and the more DRD gets moving. It's crypto with a mission, and that's the kind of energy I vibe with.

Why This Matters (Especially for Aviation Geeks)

Okay, let's get real: flying's already pretty safe, but it's not perfect. Radar's great, but it's blind in tons of places, like over the Pacific or in the middle of nowhere. ADS-B fixes that, but only if there are enough stations to catch the signals. Derad's like, "Let's crowdsource this." Here's why it's a game-changer:

Safer Skies: More stations = better tracking. That means fewer chances of planes bumping into each other (yikes) and faster help if something goes wrong.

Cheaper Than Big Tech: Building centralized stations costs a fortune. Derad's DIY approach saves cash and spreads the love to smaller players like regional airlines or even drone companies.

Regulators Love It: Blockchain makes everything transparent. Airspace rules getting broken? It's logged forever, no shady cover-ups.


Logistics Glow-Up: Airlines can plan better routes, save fuel, and track packages like champs, all thanks to this decentralized data stash.

And get this: they're not stopping at planes. Derad's teasing plans to tackle maritime tracking with AIS (think ships instead of wings). This could be huge.

Where It's Headed

Derad's still in its early ascent, but the flight plan's stacked. They're aiming for 10,000 ground stations worldwide (imagine the coverage!), launching cheap antenna kits to get more people in, and dropping "Ground Station as a Service" (GSS) so even newbies can join. The Mainnet XL launch is coming to crank up the scale, and they're teaming up with SDR makers and Layer 2 blockchains to keep it smooth and speedy.

The wildest part? They want a full-on marketplace for radio signals, covering not just planes but all kinds of real-time data. It's ambitious as hell, and I'm rooting for it.

Why Tumblr Should Stan Derad

This isn't just for crypto bros or plane spotters; it's for anyone who loves seeing tech solve real problems. Derad's got that DIY spirit Tumblr thrives on: take something niche (flight data), flip it into a community project, and make it matter. The DRD token's got legs because it's useful, not just a gamble. It's like catching a band before they blow up.

The Rough Patches

No flight's turbulence-free. Aviation's got rules out the wazoo, and regulators might side-eye a decentralized setup. Scaling to thousands of stations needs hardware and hype, which isn't instant. Other DePIN projects or big aviation players could try to muscle in too. But Derad's got a unique angle-community power and a solid mission—so I'm betting it'll hold its own.

Final Boarding Call

Derad Network's the kind of project that gets me hyped. It's crypto with soul, turning us into the heroes who keep planes safe while sticking it to centralized gatekeepers. Whether you're a tech geek, a crypto stan, or just someone who loves a good underdog story, this is worth watching.

Derad's taking off, and I'm strapped in for the ride. What about you?


Launch Your Own Crypto Platform with Notcoin Clone Script | Fast & Secure Solution

To launch your own cryptocurrency platform using a Notcoin clone script, you can follow a structured approach that leverages existing clone scripts tailored for various cryptocurrency exchanges.

Here’s a detailed guide on how to proceed:

Understanding Clone Scripts

A clone script is a pre-built software solution that replicates the functionalities of established cryptocurrency exchanges. These scripts can be customized to suit your specific business needs and allow for rapid deployment, saving both time and resources.

Types of Clone Scripts

Centralized Exchange Scripts: These replicate platforms like Binance or Coinbase, offering features such as order books and user management.

Decentralized Exchange Scripts: These are designed for platforms like Uniswap or PancakeSwap, enabling peer-to-peer trading without a central authority.

Peer-to-Peer (P2P) Exchange Scripts: These allow users to trade directly with each other, similar to LocalBitcoins or Paxful.
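The order book mentioned above is the core data structure of a centralized exchange. The Python sketch below is a generic illustration, not code from any clone script: it matches limit orders by price-time priority (best price first, then earliest order) and fills each trade at the resting order's price. Real matching engines also handle cancellations, other order types, fees, persistence, and exact decimal arithmetic; prices and quantities here are plain integers (think ticks and lots) to sidestep floating-point issues.

```python
import heapq
import itertools
from dataclasses import dataclass

@dataclass
class Trade:
    buy_id: int
    sell_id: int
    price: int
    qty: int

class OrderBook:
    """Minimal single-market limit order book with price-time priority."""

    def __init__(self) -> None:
        self._bids: list = []           # heap of (-price, seq, order_id, qty): highest bid first
        self._asks: list = []           # heap of (price, seq, order_id, qty): lowest ask first
        self._seq = itertools.count()   # arrival order breaks ties at the same price

    def submit(self, order_id: int, side: str, price: int, qty: int) -> list[Trade]:
        """Match an incoming limit order, rest any remainder, and return the fills."""
        if side not in ("buy", "sell"):
            raise ValueError("side must be 'buy' or 'sell'")
        trades = []
        book = self._asks if side == "buy" else self._bids   # the opposite side
        while qty > 0 and book:
            key, seq, resting_id, resting_qty = book[0]
            resting_price = key if side == "buy" else -key
            crosses = resting_price <= price if side == "buy" else resting_price >= price
            if not crosses:
                break
            heapq.heappop(book)
            fill = min(qty, resting_qty)
            buy_id, sell_id = (order_id, resting_id) if side == "buy" else (resting_id, order_id)
            trades.append(Trade(buy_id, sell_id, resting_price, fill))
            qty -= fill
            if resting_qty > fill:   # partially filled resting order keeps its place in line
                heapq.heappush(book, (key, seq, resting_id, resting_qty - fill))
        if qty > 0:                  # rest the unfilled remainder on our own side
            if side == "buy":
                heapq.heappush(self._bids, (-price, next(self._seq), order_id, qty))
            else:
                heapq.heappush(self._asks, (price, next(self._seq), order_id, qty))
        return trades

if __name__ == "__main__":
    book = OrderBook()
    book.submit(1, "sell", 101, 5)
    book.submit(2, "sell", 100, 5)
    for t in book.submit(3, "buy", 101, 7):
        print(t)
```

In the usage example, the buy order takes all 5 units offered at 100 first, then 2 of the 5 units at 101, leaving 3 units of order 1 resting at 101.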

Steps to Launch Your Crypto Platform

Step 1: Define Your Business Strategy

Market Research: Identify your target audience and analyze competitors.

Unique Value Proposition: Determine what sets your platform apart from others.

Step 2: Choose the Right Clone Script

Evaluate Options: Research various clone scripts available in the market, such as those for Binance, Coinbase, or P2P exchanges.

Customization: Ensure the script is customizable to meet your specific requirements, including branding and features.

Step 3: Development and Deployment

Technical Setup: Collaborate with developers to set up the necessary infrastructure, including blockchain integration and wallet services.

Security Features: Implement robust security measures, such as two-factor authentication and encryption, to protect user data and transactions.

Step 4: Compliance and Regulations

KYC/AML Integration: Ensure your platform complies with local regulations by integrating Know Your Customer (KYC) and Anti-Money Laundering (AML) protocols.

Step 5: Testing and Launch

Quality Assurance: Conduct thorough testing to identify and fix any bugs or vulnerabilities.

Launch: Once testing is complete, launch your platform and start marketing it to attract users.

Advantages of Using a Notcoin Clone Script

Cost-Effective: Using a pre-built script is generally more affordable than developing a platform from scratch.

Faster Time to Market: Notcoin Clone scripts are ready to deploy, significantly reducing development time.

Customization Options: Most scripts allow for extensive customization, enabling you to tailor the platform to your needs.

Conclusion

Launching your own cryptocurrency platform with a Notcoin clone script is a viable option that can lead to a successful venture in the growing crypto market. By following the outlined steps and leveraging the advantages of Notcoin clone scripts, you can create a robust and secure trading platform that meets user demands and regulatory requirements.

For further assistance, consider reaching out to specialized development companies that offer Notcoin clone scripts and can guide you through the setup process.

#cryptotrading#notcoin#notcoinclonescript#cryptocurrencies#crypto exchange#blockchain#crypto traders#crypto investors#cryptonews#web3 development


Complete Terraform IAC Development: Your Essential Guide to Infrastructure as Code

If you're ready to take control of your cloud infrastructure, it's time to dive into Complete Terraform IAC Development. With Terraform, you can simplify, automate, and scale infrastructure setups like never before. Whether you’re new to Infrastructure as Code (IAC) or looking to deepen your skills, mastering Terraform will open up a world of opportunities in cloud computing and DevOps.

Why Terraform for Infrastructure as Code?

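Before we get into Complete Terraform IAC Development, let’s explore why Terraform is the go-to choice. HashiCorp’s Terraform has quickly become a top tool for managing cloud infrastructure because it supports multiple cloud providers (AWS, Google Cloud, Azure, and more), uses a declarative language (HCL) that’s easy to learn, and has publicly available source code. (Terraform was open source until 2023, when HashiCorp relicensed it under the Business Source License; the community fork OpenTofu remains open source.)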

Key Benefits of Learning Terraform

In today's fast-paced tech landscape, there’s a high demand for professionals who understand IAC and can deploy efficient, scalable cloud environments. Here’s how Terraform can benefit you and why the Complete Terraform IAC Development approach is invaluable:

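Cross-Platform Compatibility: Terraform supports multiple cloud providers, so you can use the same language, tooling, and workflow across different clouds. Resource definitions themselves remain provider-specific, so an AWS configuration will not run unchanged on Azure.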

Scalability and Efficiency: By using IAC, you automate infrastructure, reducing errors, saving time, and allowing for scalability.

Modular and Reusable Code: With Terraform, you can build modular templates, reusing code blocks for various projects or environments.

These features make Terraform an attractive skill for anyone working in DevOps, cloud engineering, or software development.

Getting Started with Complete Terraform IAC Development

The beauty of Complete Terraform IAC Development is that it caters to both beginners and intermediate users. Here’s a roadmap to kickstart your learning:

Set Up the Environment: Install Terraform and configure it for your cloud provider. This step is simple and provides a solid foundation.

Understand HCL (HashiCorp Configuration Language): Terraform’s configuration language is straightforward but powerful. Knowing the syntax is essential for writing effective scripts.

Define Infrastructure as Code: Begin by defining your infrastructure in simple blocks. You’ll learn to declare resources, manage providers, and understand how to structure your files.

Use Modules: Modules are self-contained packages of Terraform configuration, written by you or pulled from a registry, that you can reuse across projects, making it easier to manage and scale complex infrastructures.

Apply Best Practices: Understanding how to structure your code for readability, reliability, and reusability will save you headaches as projects grow.

Core Components in Complete Terraform IAC Development

When working with Terraform, you’ll interact with several core components. Here’s a breakdown:

Providers: These are plugins that allow Terraform to manage infrastructure on your chosen cloud platform (AWS, Azure, etc.).

Resources: The building blocks of your infrastructure, resources represent things like instances, databases, and storage.

Variables and Outputs: Variables let you define dynamic values, and outputs allow you to retrieve data after deployment.

State Files: Terraform uses a state file to store information about your infrastructure. This file is essential for tracking changes and ensuring Terraform manages the infrastructure accurately.

Mastering these components will solidify your Terraform foundation, giving you the confidence to build and scale projects efficiently.
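Variables and outputs come together when other tools need the values Terraform produced. As a minimal sketch, assuming Python 3.9+ and the JSON layout printed by `terraform output -json` (each output name mapped to an object with `value`, `type`, and `sensitive` keys), a script could read those values like this; the function name and the output names in the sample are hypothetical.

```python
import json
from typing import Any


def read_outputs(raw_json: str, include_sensitive: bool = False) -> dict[str, Any]:
    """Flatten `terraform output -json` text into a simple {name: value} dict."""
    parsed = json.loads(raw_json)
    values: dict[str, Any] = {}
    for name, meta in parsed.items():
        # Each entry carries "value", "type" and a "sensitive" flag.
        if meta.get("sensitive") and not include_sensitive:
            continue  # keep secrets out of logs unless explicitly requested
        values[name] = meta["value"]
    return values


if __name__ == "__main__":
    sample = """{
      "instance_ip": {"sensitive": false, "type": "string", "value": "203.0.113.10"},
      "db_password": {"sensitive": true, "type": "string", "value": "s3cret"}
    }"""
    print(read_outputs(sample))  # {'instance_ip': '203.0.113.10'}
```

Skipping sensitive outputs by default is a deliberate choice: outputs often end up in CI logs, where a leaked password is hard to take back.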

Best Practices for Complete Terraform IAC Development

In the world of Infrastructure as Code, following best practices is essential. Here are some tips to keep in mind:

Organize Code with Modules: Organizing code with modules promotes reusability and makes complex structures easier to manage.

Use a Remote Backend: Storing your Terraform state in a remote backend, like Amazon S3 or Azure Storage, ensures that your team can access the latest state.

Implement Version Control: Version control systems like Git are vital. They help you track changes, avoid conflicts, and ensure smooth rollbacks.

Plan Before Applying: Terraform’s “plan” command helps you preview changes before deploying, reducing the chances of accidental alterations.

By following these practices, you’re ensuring your IAC deployments are both robust and scalable.
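Planning becomes even more useful when a pipeline can inspect the plan automatically. Here is a minimal sketch, assuming Python 3.9+ and that you have saved a plan with `terraform plan -out=tfplan` and exported it with `terraform show -json tfplan`. It counts the planned actions in the `resource_changes` list and flags any resource scheduled for deletion; the function name and the resource addresses in the sample are hypothetical.

```python
import json
from collections import Counter


def summarize_plan(plan_json: str) -> tuple[Counter, list[str]]:
    """Count planned actions and list resources the plan would delete.

    `plan_json` is the text printed by `terraform show -json <planfile>`.
    """
    plan = json.loads(plan_json)
    counts: Counter = Counter()
    deleted: list[str] = []
    for change in plan.get("resource_changes", []):
        # "actions" is a list such as ["create"], ["update"], ["no-op"],
        # or ["delete", "create"] for a replacement.
        actions = change["change"]["actions"]
        counts["+".join(actions)] += 1
        if "delete" in actions:
            deleted.append(change["address"])
    return counts, deleted


if __name__ == "__main__":
    sample = json.dumps({"resource_changes": [
        {"address": "aws_instance.web", "change": {"actions": ["update"]}},
        {"address": "aws_db_instance.main", "change": {"actions": ["delete", "create"]}},
    ]})
    counts, deleted = summarize_plan(sample)
    print(dict(counts))  # {'update': 1, 'delete+create': 1}
    print(deleted)       # ['aws_db_instance.main']
```

A team might choose to fail the CI job, or require manual approval, whenever `deleted` is non-empty, since replacing stateful resources such as databases is exactly the kind of accidental alteration a plan review should catch.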

Real-World Applications of Terraform IAC

Imagine you’re managing a complex multi-cloud environment. Using Complete Terraform IAC Development, you could deploy similar infrastructures across AWS, Azure, and Google Cloud with a single tool and workflow, although each cloud still needs its own provider-specific resource definitions.

Use Case 1: Multi-Region Deployments

Suppose you need a web application deployed across multiple regions. Using Terraform, you can create templates that deploy the application consistently across different regions, ensuring high availability and redundancy.
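Because Terraform also accepts configuration written in JSON (files ending in `.tf.json`), a short script can stamp out the same module once per region. This is a minimal sketch, assuming AWS and a hypothetical local module at `./modules/web_app` that requires the `aws` provider; the region list and function name are illustrative too. Check the Terraform JSON configuration syntax documentation for your version before relying on the exact shape. Writing native HCL with provider aliases achieves the same result; generating JSON is simply one option when the region list lives outside Terraform.

```python
import json

# Hypothetical values: replace with your own regions and module path.
REGIONS = ["us-east-1", "eu-west-1"]
MODULE_SOURCE = "./modules/web_app"


def multi_region_config(regions: list[str], module_source: str) -> dict:
    """Build a Terraform JSON-syntax config with one module instance per region."""
    providers = []
    modules = {}
    for region in regions:
        alias = region.replace("-", "_")  # e.g. "us-east-1" -> "us_east_1"
        # One aliased AWS provider configuration per region.
        providers.append({"alias": alias, "region": region})
        # Each module copy receives the provider for its own region.
        modules[f"web_{alias}"] = {
            "source": module_source,
            "providers": {"aws": f"aws.{alias}"},
        }
    return {"provider": {"aws": providers}, "module": modules}


if __name__ == "__main__":
    # Redirect this output to main.tf.json, then run terraform init and plan as usual.
    print(json.dumps(multi_region_config(REGIONS, MODULE_SOURCE), indent=2))
```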

Use Case 2: Scaling Web Applications

Let’s say your company’s website traffic spikes during a promotion. With Terraform, you can declare the autoscaling groups and scaling policies your cloud provider offers; the provider then adjusts server capacity automatically, so your site remains responsive.

Advanced Topics in Complete Terraform IAC Development

Once you’re comfortable with the basics, Complete Terraform IAC Development offers advanced techniques to enhance your skillset:

Terraform Workspaces: Workspaces allow you to manage multiple environments (e.g., development, testing, production) within a single configuration.

Dynamic Blocks and Conditionals: Use dynamic blocks and conditionals to make your code more adaptable, allowing you to define configurations that change based on the environment or input variables.

Integration with CI/CD Pipelines: Integrate Terraform with CI/CD tools like Jenkins or GitLab CI to automate deployments. This approach ensures consistent infrastructure management as your application evolves.
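Workspaces and CI/CD fit together naturally. Below is a minimal sketch, in Python and assuming only that the `terraform` CLI is on the job’s PATH, of the steps a Jenkins or GitLab CI job might run for one environment. The function names and the `tfplan` file name are hypothetical, and real pipelines usually express these steps in the CI tool’s own configuration file.

```python
import subprocess


def pipeline_commands(workspace: str, plan_file: str = "tfplan") -> list[list[str]]:
    """Terraform CLI calls a CI job might run for one workspace, in order."""
    return [
        ["terraform", "init", "-input=false"],
        # The workspace (e.g. "staging") must already exist.
        ["terraform", "workspace", "select", workspace],
        ["terraform", "plan", "-input=false", f"-out={plan_file}"],
        # Applying the saved plan applies exactly what was planned, without a prompt.
        ["terraform", "apply", "-input=false", plan_file],
    ]


def run_pipeline(workspace: str) -> None:
    """Run each step, stopping at the first non-zero exit code."""
    for cmd in pipeline_commands(workspace):
        print("$", " ".join(cmd))
        subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
```

A staging job would then call `run_pipeline("staging")`. In practice many teams insert a review or approval step between the plan and apply commands rather than running them back to back.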

Tools and Resources to Support Your Terraform Journey

Here are some popular tools to streamline your learning:

Terraform CLI: The primary tool for creating and managing your infrastructure.

Terragrunt: An additional layer for working with Terraform, Terragrunt simplifies managing complex Terraform environments.

Terraform Cloud (now HCP Terraform): HashiCorp’s managed service for executing and collaborating on Terraform workflows.

There are countless resources available online, from Terraform documentation to forums, blogs, and courses. HashiCorp offers a free resource hub, and platforms like Udemy provide comprehensive courses to guide you through Complete Terraform IAC Development.

Start Your Journey with Complete Terraform IAC Development

If you’re aiming to build a career in cloud infrastructure or simply want to enhance your DevOps toolkit, Complete Terraform IAC Development is a skill worth mastering. From managing complex multi-cloud infrastructures to automating repetitive tasks, Terraform provides a powerful framework to achieve your goals.

Start with the basics, gradually explore advanced features, and remember: practice is key. The world of cloud computing is evolving rapidly, and those who know how to leverage Infrastructure as Code will always have an edge. With Terraform, you’re not just coding infrastructure; you’re building a foundation for the future. So, take the first step into Complete Terraform IAC Development. It’s your path to becoming a versatile, skilled cloud professional.


mjPRO | The Ultimate Procurement System for Reducing Costs and Enhancing Efficiency

In today’s fast-paced digital world, businesses are looking for tools that can streamline their processes, enhance efficiency, and minimize costs. Procurement, a critical function for every organization, is no exception. Choosing the right procurement software can make all the difference in managing supply chains effectively, improving governance, and cutting procurement costs. Among the numerous procurement software companies, mjPRO stands out as a robust, AI-powered solution that offers scalability, cost-efficiency, and enhanced profitability.

In this blog, we’ll explore the best features of mjPRO, why it’s considered the best procurement software, and how it supports businesses in managing procurement processes seamlessly.

What Is Procurement Software?

Before we dive into mjPRO, let’s first define procurement software. In simple terms, procurement software is a digital tool that automates and manages the entire procurement process—from requisitioning to payment. It helps organizations track their purchases, manage suppliers, and ensure compliance, reducing manual intervention and boosting overall efficiency. Today, eProcurement software solutions are often cloud-based, providing businesses with flexibility, scalability, and advanced data-driven insights.

Why mjPRO Is the Best Procurement Software

mjPRO is not just another procurement software; it’s a comprehensive eProcurement software platform that digitizes the entire procurement process from planning to payment. Whether you’re managing simple purchases or complex projects, mjPRO provides a unified solution that reduces procurement costs, enhances supplier management, and ensures near 100% delivery compliance. Here's why mjPRO is considered one of the top procurement software solutions in the market:

1. Cloud-Based, Pay-Per-Use Model

One of the most attractive features of mjPRO is its pay-per-use, cloud-based model. Unlike traditional procurement systems that require significant upfront investment and infrastructure, mjPRO offers a flexible, cloud-based solution that allows for scalability based on your business needs. This ensures a faster ROI and eliminates the need for costly hardware or software upgrades.

2. Intelligent Platform with AI-Powered Automation

At its core, mjPRO is an intelligent procurement platform powered by AI. It leverages advanced technologies like AI and NLP-based analytics to offer real-time insights, such as category and supplier recommendations. By learning from your procurement patterns and suggesting suppliers based on past purchasing behavior, mjPRO takes the guesswork out of sourcing, making it the best procurement software for both small and large enterprises.

3. Strong Supplier Base and Smart Decision-Making Tools

With mjPRO, you’ll have access to an ever-growing supplier base. The platform continuously adds new suppliers to the ecosystem, giving businesses more options and competitive pricing. What’s more, mjPRO integrates AI-based decision-making tools to provide real-time supplier recommendations, enabling procurement teams to make informed decisions quickly.

4. End-to-End Procurement Chain Digitization

mjPRO digitizes the entire procurement chain, from planning to payment, making it one of the top procurement software platforms available today. Let’s break down how mjPRO handles each step of the procurement process:

a. Plan

The planning phase is critical to ensuring the procurement process runs smoothly. mjPRO helps streamline this process by allowing users to categorize items up to four levels, aggregate or split requirements, and manage budgets more effectively. With features like eBriefcase and category-specific insights, businesses can easily plan for both routine and complex procurements.

b. Source

Supplier management is one of the most challenging aspects of procurement. mjPRO excels in this area by offering a comprehensive supplier management module. The system helps businesses survey, rate, and profile suppliers before they are approved. mjPRO also automates RFQs (Request for Quotations) and integrates RPA-based bidding, which enhances supplier negotiations and ensures competitive pricing.

c. Procure

Once suppliers have been approved, mjPRO facilitates the creation and management of purchase orders (POs). The platform automates the post-PO process by handling tasks such as PO acceptance, ASN (Advanced Shipping Notice) generation, PI (Proforma Invoice) approval, and eCatalogue management. This automation reduces the risk of human error and cuts procurement time by as much as 40%.

d. Pay

Finally, mjPRO ensures that payment processing is seamless. The platform performs thorough three-point checks before invoice approval and integrates with payment gateways to automate payments. The result is a faster, more efficient payment process that ensures suppliers are paid on time, minimizing disruptions in the supply chain.
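The “three-point checks” most likely refer to what procurement teams usually call a three-way match: before approval, the invoice is compared with the purchase order and the goods receipt. The sketch below assumes that reading and is a generic illustration, not mjPRO’s implementation; the `Line` type, its fields, the function name, and the 1% price tolerance are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Line:
    """One line item on a purchase order or invoice (hypothetical schema)."""
    sku: str
    qty: int
    unit_price: float


def three_way_match(po: list[Line], received: dict[str, int],
                    invoice: list[Line], price_tolerance: float = 0.01) -> list[str]:
    """Check invoice lines against the PO and goods receipt; return any problems."""
    ordered = {line.sku: line for line in po}
    problems: list[str] = []
    for inv in invoice:
        po_line = ordered.get(inv.sku)
        if po_line is None:
            problems.append(f"{inv.sku}: not on the purchase order")
            continue
        got = received.get(inv.sku, 0)
        if inv.qty > got:
            problems.append(f"{inv.sku}: invoiced {inv.qty}, received {got}")
        # Allow a small relative price difference (e.g. rounding).
        if abs(inv.unit_price - po_line.unit_price) > price_tolerance * po_line.unit_price:
            problems.append(f"{inv.sku}: price {inv.unit_price} vs PO {po_line.unit_price}")
    return problems


if __name__ == "__main__":
    po = [Line("A-100", 10, 5.0)]
    invoice = [Line("A-100", 10, 5.0)]
    print(three_way_match(po, {"A-100": 8}, invoice))
    # ['A-100: invoiced 10, received 8']
```

An empty list means the invoice can move on to payment; anything else would be routed to a person for review.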

How mjPRO Benefits Your Business

Now that we’ve covered the key features of mjPRO, let’s take a look at how this procurement software can benefit your business:

1. Reduces Procurement Costs by Up to 7%

Procurement costs can take up a significant portion of a company’s budget. By using mjPRO, businesses can reduce these costs by up to 7%. This is achieved through better supplier negotiations, automated procurement processes, and more efficient resource management.

2. Makes Procurement 40% Faster

One of the most compelling advantages of mjPRO is its ability to speed up the procurement process. The platform reduces manual tasks and automates the creation and management of POs, invoices, and supplier communications, making procurement up to 40% faster. This is a game-changer for businesses that operate in fast-paced environments and need to meet tight deadlines.

3. Limits Supply Risk with Near-100% Delivery Compliance

Supply chain disruptions can have serious consequences for businesses. mjPRO mitigates these risks by ensuring nearly 100% delivery compliance. With advanced supplier profiling, automated RFQs, and integrated payment gateways, mjPRO ensures that your supply chain operates smoothly and without delays.

4. Strengthens Governance and Helps Prevent Fraud

In the digital age, governance and compliance are more important than ever. mjPRO strengthens governance across the procurement process by offering real-time insights into supplier performance and ensuring that all procurement activities are auditable. This procurement software also helps prevent fraud by enforcing strict supplier approval and payment processes.

Unlock the Full Potential of eProcurement with mjPRO

If you're searching for a procurement software company that offers a comprehensive, scalable solution for your business, look no further than mjPRO. This eProcurement software not only digitizes the entire procurement process but also enhances it with AI-powered tools, robust supplier management, and seamless payment integration.

With mjPRO, businesses can gain full control over their procurement activities while reducing costs, accelerating procurement timelines, and ensuring compliance across the board.

Key Features of mjPRO:

Pay-Per-Use Cloud-Based Solution: Achieve faster ROI without heavy investment.

AI-Powered Automation: Make data-driven decisions with category and supplier recommendations.

Strong Supplier Base: Access to a continuously growing supplier network.

Comprehensive Digitization: Manage everything from planning to payment on one platform.

Faster Procurement: Reduce procurement times by up to 40% through automation.

Enhanced Governance: Guard against fraud and ensure full compliance with real-time monitoring.

If you're ready to take your procurement processes to the next level, mjPRO is the best procurement software for businesses of all sizes. Contact us today to learn more and unlock the full potential of eProcurement software for your organization!

#Procurement software#Best procurement software#Top Procurement software#eProcurement software#Procurement software company#Procurement software companies#Procurement system


Windows Server 2016: Revolutionizing Enterprise Computing

In the ever-evolving landscape of enterprise computing, Windows Server 2016 emerges as a beacon of innovation and efficiency, heralding a new era of productivity and scalability for businesses worldwide. Launched by Microsoft at its Ignite conference in September 2016 and generally available from October 2016, Windows Server 2016 represents a significant leap forward in terms of security, performance, and versatility, empowering organizations to embrace the challenges of the digital age with confidence. In this in-depth exploration, we delve into the transformative capabilities of Windows Server 2016 and its profound impact on the fabric of enterprise IT.

Introduction to Windows Server 2016

Windows Server 2016 stands as the cornerstone of Microsoft's server operating systems, offering a comprehensive suite of features and functionalities tailored to meet the diverse needs of modern businesses. From enhanced security measures to advanced virtualization capabilities, Windows Server 2016 is designed to provide organizations with the tools they need to thrive in today's dynamic business environment.

Key Features of Windows Server 2016

Enhanced Security: Security is paramount in Windows Server 2016, with features such as Credential Guard, Device Guard, and Just Enough Administration (JEA) providing robust protection against cyber threats. Shielded Virtual Machines (VMs) further bolster security by encrypting a VM’s disks and state so that it can run only on approved, attested hosts, protecting it even from compromised host administrators.

Software-Defined Storage: Windows Server 2016 introduces Storage Spaces Direct, a revolutionary software-defined storage solution that enables organizations to create highly available and scalable storage pools using commodity hardware. With Storage Spaces Direct, businesses can achieve greater flexibility and efficiency in managing their storage infrastructure.

Improved Hyper-V: Hyper-V in Windows Server 2016 undergoes significant enhancements, including support for nested virtualization, Shielded VMs, and rolling upgrades. These features enable organizations to optimize resource utilization, improve scalability, and enhance security in virtualized environments.

Nano Server: Nano Server represents a lightweight and minimalistic installation option in Windows Server 2016, designed for cloud-native and containerized workloads. With reduced footprint and overhead, Nano Server enables organizations to achieve greater agility and efficiency in deploying modern applications.

Container Support: Windows Server 2016 embraces the trend of containerization with native support for Windows Server containers and Hyper-V containers, both managed through Docker. By enabling organizations to build, deploy, and manage containerized applications seamlessly, Windows Server 2016 empowers developers to innovate faster and IT operations teams to achieve greater flexibility and scalability.

Benefits of Windows Server 2016

Windows Server 2016 offers a myriad of benefits that position it as the platform of choice for modern enterprise computing:

Enhanced Security: With advanced security features like Credential Guard and Shielded VMs, Windows Server 2016 helps organizations protect their data and infrastructure from a wide range of cyber threats, ensuring peace of mind and regulatory compliance.

Improved Performance: Windows Server 2016 delivers enhanced performance and scalability, enabling organizations to handle the demands of modern workloads with ease and efficiency.

Flexibility and Agility: With support for Nano Server and containers, Windows Server 2016 provides organizations with unparalleled flexibility and agility in deploying and managing their IT infrastructure, facilitating rapid innovation and adaptation to changing business needs.

Cost Savings: By leveraging features such as Storage Spaces Direct and Hyper-V, organizations can achieve significant cost savings through improved resource utilization, reduced hardware requirements, and streamlined management.

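Future-Proofing: Windows Server 2016 was designed to support emerging technologies and trends such as containers and software-defined storage, helping organizations adapt to new challenges and opportunities in the digital landscape. Keep in mind, however, that its mainstream support ended in January 2022 and extended support is scheduled to end in January 2027.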

Conclusion: Embracing the Future with Windows Server 2016

In conclusion, Windows Server 2016 stands as a testament to Microsoft's commitment to innovation and excellence in enterprise computing. With its advanced security, enhanced performance, and unparalleled flexibility, Windows Server 2016 empowers organizations to unlock new levels of efficiency, productivity, and resilience. Whether deployed on-premises, in the cloud, or in hybrid environments, Windows Server 2016 serves as the foundation for digital transformation, enabling organizations to embrace the future with confidence and achieve their full potential in the ever-evolving world of enterprise IT.

Website: https://microsoftlicense.com


Exploring Innovative Technology and Future-proofing in High-End Model Cars

In the rapidly evolving automotive industry, high-end model cars are at the forefront of innovation, integrating cutting-edge technology and future-proofing features to meet the demands of discerning consumers. Let's delve into the innovative technology and future-proofing strategies that define high-end model cars.

Advanced Safety and Driver-Assist Systems

Automated Driving Capabilities: High-end model cars are equipped with advanced automated driving features, such as adaptive cruise control, lane-keeping assistance, and automated parking, paving the way for a future of self-driving capabilities.

Collision Avoidance Technology: Utilizing radar, lidar, and camera systems, these cars incorporate collision avoidance technology to enhance safety, mitigate accidents, and protect both occupants and pedestrians.

Advanced Driver Monitoring: Cutting-edge driver monitoring systems use AI and advanced sensors to detect driver drowsiness and distraction and to issue alerts, ensuring a safer driving experience.

Sustainable Powertrains and Electrification

Electric and Hybrid Technology: High-end model cars embrace electrification with sophisticated electric and hybrid powertrains, offering enhanced efficiency, lower emissions, and a glimpse into the future of sustainable mobility.

Fast-Charging Infrastructure: These cars are designed to support fast-charging capabilities, reducing charging times and enhancing the practicality of electric driving.

Regenerative Braking: Incorporating regenerative braking technology, high-end models capture and store energy during braking, maximizing efficiency and range.

Connectivity and Infotainment Evolution

5G Connectivity: Future-proofing high-end model cars involves integrating 5G connectivity, enabling faster data transfer, low-latency communication, and unlocking new possibilities for in-car entertainment and communication.

Enhanced Infotainment Interfaces: These cars feature intuitive, AI-powered infotainment interfaces that learn from user behavior, anticipate preferences, and seamlessly integrate with personal devices and services.

Over-the-Air Updates: Future-proofing includes over-the-air software updates, ensuring that the car's systems and features remain up to date with the latest enhancements and security patches.

Environmental Sustainability and Luxury

Sustainable Materials: High-end model cars are incorporating sustainable materials in their interiors, showcasing a commitment to environmental responsibility without compromising luxury and comfort.

Energy-Efficient Climate Control: Utilizing advanced climate control systems, these cars optimize energy usage to maintain a comfortable interior environment while minimizing energy consumption.

Adaptive Lighting Technology: Future-proofing extends to adaptive lighting systems that improve visibility, enhance safety, and reduce energy consumption through advanced LED and laser technologies.

High-end model cars are at the vanguard of innovation, embracing advanced technology and future-proofing strategies to deliver unparalleled driving experiences.

From automated driving capabilities to sustainable powertrains, enhanced connectivity, and a commitment to environmental sustainability, these cars are shaping the future of automotive luxury and performance, setting new standards for innovation and excellence in the automotive industry.

In the ever-evolving landscape of car shipping, staying ahead with innovative technology is crucial for a safe and efficient experience. Explore the latest advancements, read direct car shipping reviews, and see how these developments can future-proof your transportation needs.

youtube

4 notes

·

View notes

Text

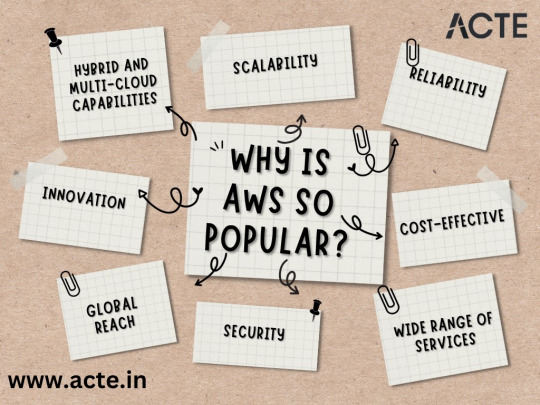

The AWS Advantage: Exploring the Key Reasons Behind Its Dominance

In the ever-evolving landscape of cloud computing and web services, Amazon Web Services (AWS) has emerged as a true juggernaut. Its dominance transcends industries, making it the preferred choice for businesses, startups, and individuals alike. AWS's meteoric rise can be attributed to a potent combination of factors that have revolutionized the way organizations approach IT infrastructure and software development. In this comprehensive exploration, we will delve into the multifaceted reasons behind AWS's widespread popularity. We'll dissect how scalability, reliability, cost-effectiveness, a vast service portfolio, unwavering security, global reach, relentless innovation, and hybrid/multi-cloud capabilities have all played crucial roles in cementing AWS's position at the forefront of cloud computing.

The AWS Revolution: Unpacking the Reasons Behind Its Popularity:

1. Scalability: Fueling Growth and Flexibility

AWS's unparalleled scalability is one of its defining features. This capability allows businesses to start with minimal resources and effortlessly scale their infrastructure up or down based on demand. Whether you're a startup experiencing rapid growth or an enterprise dealing with fluctuating workloads, AWS offers the flexibility to align resources with your evolving requirements. This "pay-as-you-go" model ensures that you only pay for what you use, eliminating the need for costly upfront investments in hardware and infrastructure.

2. Reliability: The Backbone of Mission-Critical Operations

AWS's reputation for reliability is second to none. With a highly resilient infrastructure and a robust global network, AWS delivers on its promise of high availability. Its service-specific Service Level Agreements (SLAs) commit to monthly uptime percentages and provide service credits when those targets are missed, making AWS a strong choice for mission-critical applications. Businesses can rely on AWS to keep their services up and running, even in the face of unexpected challenges.

3. Cost-Effectiveness: A Game-Changer for Businesses of All Sizes

The cost-effectiveness of AWS is a game-changer. Its pay-as-you-go pricing model enables organizations to avoid hefty upfront capital expenditures. Startups can launch their ventures with minimal financial barriers, while enterprises can optimize costs by only paying for the resources they consume. This cost flexibility is a driving force behind AWS's widespread adoption across diverse industries.
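A small worked example makes the pay-as-you-go argument concrete. This sketch uses made-up numbers (a flat $0.10 per server-hour and a 730-hour month) and ignores real-world factors such as hardware depreciation, discounts for committed usage, and data transfer; it only compares provisioning for peak load against paying for the server-hours actually used. The function names are hypothetical.

```python
# Hypothetical figures for illustration only; these are not AWS prices.
HOURS_PER_MONTH = 730
HOURLY_RATE = 0.10  # cost of one server for one hour


def fixed_capacity_cost(peak_servers: int, hourly_rate: float,
                        hours: int = HOURS_PER_MONTH) -> float:
    """Provisioning for peak load: every server is paid for all month."""
    return peak_servers * hours * hourly_rate


def pay_as_you_go_cost(usage: list[tuple[int, int]], hourly_rate: float) -> float:
    """Paying only for server-hours used; usage is a list of (servers, hours) pairs."""
    return sum(servers * hours for servers, hours in usage) * hourly_rate


if __name__ == "__main__":
    # 2 servers for most of the month, 10 servers during a 30-hour promotion.
    usage = [(2, 700), (10, 30)]
    print(f"fixed capacity: ${fixed_capacity_cost(10, HOURLY_RATE):.2f}")  # $730.00
    print(f"pay-as-you-go:  ${pay_as_you_go_cost(usage, HOURLY_RATE):.2f}")  # $170.00
```

With this hypothetical load profile, paying per hour costs less than a quarter of provisioning for the peak; the gap shrinks as usage becomes steadier.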

4. Wide Range of Services: A One-Stop Cloud Ecosystem

AWS offers a vast ecosystem of services that cover virtually every aspect of cloud computing. From computing and storage to databases, machine learning, analytics, and more, AWS provides a comprehensive suite of tools and resources. This breadth of services allows businesses to address various IT needs within a single platform, simplifying management and reducing the complexity of multi-cloud environments.

5. Security: Fortifying the Cloud Environment

Security is a paramount concern in the digital age, and AWS takes it seriously. The platform offers a myriad of security tools and features designed to protect data and applications. AWS complies with various industry standards and certifications, providing a secure environment for sensitive workloads. This commitment to security has earned AWS the trust of organizations handling critical data and applications.

6. Global Reach: Bringing Services Closer to Users

With data centers strategically located in multiple regions worldwide, AWS enables businesses to deploy applications and services closer to their end-users. This reduces latency and enhances the overall user experience, a crucial advantage in today's global marketplace. AWS's global presence lets your services reach users wherever they are, with good performance and responsiveness.

7. Innovation: Staying Ahead of the Curve

AWS's culture of innovation keeps businesses at the forefront of technology. The platform continually introduces new services and features, allowing organizations to leverage the latest advancements without the need for significant internal development efforts. This innovation-driven approach empowers businesses to remain agile and competitive in a rapidly evolving digital landscape.

8. Hybrid and Multi-Cloud Capabilities: Embracing Diverse IT Environments

AWS recognizes that not all organizations operate solely in the cloud. Many have on-premises infrastructure and may choose to adopt a multi-cloud strategy. AWS provides solutions for hybrid and multi-cloud environments, enabling businesses to seamlessly integrate their existing infrastructure with the cloud or even leverage multiple cloud providers. This flexibility ensures that AWS can adapt to the unique requirements of each organization.

Amazon Web Services has risen to unprecedented popularity by offering unmatched scalability, reliability, cost-effectiveness, and a comprehensive service portfolio. Its commitment to security, global reach, relentless innovation, and support for hybrid/multi-cloud environments make it the preferred choice for businesses worldwide. ACTE Technologies plays a crucial role in ensuring that professionals can harness the full potential of AWS through its comprehensive training programs. As AWS continues to shape the future of cloud computing, those equipped with the knowledge and skills provided by ACTE Technologies are poised to excel in this ever-evolving landscape.


VPS Hosting Unleashed: Unraveling the Secrets Behind the Best in the Business

Introduction

In the ever-evolving landscape of online presence, VPS hosting stands as a pivotal factor in ensuring a seamless and powerful digital experience. Businesses, both small and large, are constantly seeking the best VPS Hosting Services to propel their online ventures. In this article, we delve into the intricacies of VPS hosting, uncovering the secrets behind the best in the business.

What is VPS Hosting?

VPS, or Virtual Private Server, hosting is a revolutionary approach that bridges the gap between shared hosting and dedicated servers. It provides users with a dedicated portion of a physical server, offering enhanced performance, control, and flexibility.

Key Features of Top-notch VPS Hosting

Scalability: The best VPS hosting solutions offer seamless scalability, allowing businesses to adapt to changing needs without compromising performance.

Resource Allocation: With dedicated resources such as RAM, CPU, and storage, top VPS hosting ensures optimal performance even during traffic spikes.

Customization: The ability to customize server configurations empowers users to tailor the hosting environment to their specific requirements.

Unraveling the Secrets

The crux of superior VPS hosting lies in the utilization of cutting-edge technology. Industry leaders invest in state-of-the-art infrastructure, incorporating the latest advancements in server hardware and virtualization technologies. This ensures not only speed and reliability but also positions them as leaders in the fiercely competitive hosting landscape.

Robust Security Measures

Security is paramount in the digital realm, and the best VPS hosting providers leave no stone unturned. Advanced security protocols, regular software updates, and proactive monitoring contribute to a secure hosting environment. This is particularly crucial for businesses handling sensitive data or running e-commerce platforms.

Exceptional Customer Support

A hallmark of top-tier VPS hosting is the provision of exceptional customer support. Prompt and knowledgeable assistance can make the difference between a minor hiccup and significant downtime. Providers that prioritize customer satisfaction with 24/7 support ensure that clients can navigate any challenges swiftly and efficiently.

Performance Optimization

Optimizing server performance is a science, and the best VPS hosting providers have mastered it. From load balancing to efficient caching mechanisms, every aspect is fine-tuned to deliver blazing-fast website loading times. This not only enhances user experience but also contributes to improved search engine rankings.

Choosing the Best VPS Hosting

Performance Metrics: Evaluate providers based on their performance metrics, including uptime guarantees, server response times, and overall reliability.

Scalability Options: A hosting solution that can grow with your business is indispensable. Assess the scalability options offered by different providers.

Customer Reviews: Real-world experiences matter. Scrutinize customer reviews to gauge the satisfaction levels of existing users.

Cost vs. Value: While cost is a factor, the emphasis should be on value. A slightly higher investment for superior features and support can pay off in the long run.
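Uptime guarantees are easier to compare once they are converted into allowed downtime. A quick sketch, assuming a 30-day month and a hypothetical helper name, shows that each extra "nine" cuts the permitted downtime by a factor of ten.

```python
def allowed_downtime_minutes(uptime_percent: float, days: float = 30) -> float:
    """Downtime an uptime guarantee still permits over a period of `days` days."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - uptime_percent / 100)


if __name__ == "__main__":
    for sla in (99.0, 99.9, 99.99):
        print(f"{sla}% uptime -> {allowed_downtime_minutes(sla):.1f} min per 30 days")
    # 99.0% uptime -> 432.0 min per 30 days
    # 99.9% uptime -> 43.2 min per 30 days
    # 99.99% uptime -> 4.3 min per 30 days
```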

Conclusion

VPS hosting is the backbone of a robust online presence, and unlocking the secrets behind the best in the business is key to making an informed decision. Cutting-edge technology, robust security, exceptional customer support, and performance optimization are the cornerstones of top-tier VPS hosting providers.

In the competitive digital landscape, choosing the right VPS hosting provider is not just a business decision; it's a strategic move that can define your online success. As you navigate the options available, keep in mind the key considerations highlighted in this article to make an informed and impactful choice.


Decoding CISA Exploited Vulnerabilities

Integrating CISA Tools for Effective Vulnerability Management

With over 20,000 CVEs reported annually, vulnerability management teams struggle to detect and update software with known vulnerabilities. In principle, these teams must patch software across their entire organization to reduce risk and prevent a cybersecurity compromise, but patching everything is unachievable. Since it’s hard to patch all systems, most teams focus on fixing vulnerabilities that score high in the Common Vulnerability Scoring System (CVSS), a standardized and repeatable methodology that rates reported vulnerabilities on a 0.0 to 10.0 scale from least to most severe.
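For reference, the CVSS v3.x specification maps base scores to qualitative ratings: None (0.0), Low (0.1 to 3.9), Medium (4.0 to 6.9), High (7.0 to 8.9), and Critical (9.0 to 10.0). The sketch below applies that scale and sorts a list of findings by score, which is essentially the severity-first prioritization described here; the function name and the CVE IDs are placeholders.

```python
def cvss_v3_severity(score: float) -> str:
    """Map a CVSS v3.x base score (0.0 to 10.0) to its qualitative rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"       # 0.1 to 3.9
    if score < 7.0:
        return "Medium"    # 4.0 to 6.9
    if score < 9.0:
        return "High"      # 7.0 to 8.9
    return "Critical"      # 9.0 to 10.0


if __name__ == "__main__":
    # Hypothetical findings: (CVE ID, base score)
    findings = [("CVE-0000-0001", 5.3), ("CVE-0000-0002", 9.8), ("CVE-0000-0003", 7.5)]
    # Highest score first: the conventional severity-driven patch order.
    for cve, score in sorted(findings, key=lambda f: f[1], reverse=True):
        print(cve, score, cvss_v3_severity(score))
```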

But how do these organizations know that prioritizing software with the highest CVSS scores is the right approach? Reporting to executives the number or percentage of critical-severity CVEs fixed is useful, but does it tell us anything about the organization's resilience? Does reducing the number of critical CVEs significantly reduce breach risk? In principle it does, but in practice it's hard to know.

To increase cybersecurity resilience, CISA identified exploited vulnerabilities

The Cybersecurity and Infrastructure Security Agency (CISA) created its Known Exploited Vulnerabilities (KEV) initiative to reduce actual breaches rather than theoretical risk. CISA strongly urges organizations to continuously review the KEV catalog and prioritize remediation of the vulnerabilities it lists. By keeping the catalog up to date, CISA aims to provide an “authoritative source of vulnerabilities that have been exploited in the wild” and help organizations mitigate risk and stay ahead of cyberattacks.

CISA has narrowed the list of CVEs that security teams should remediate from tens of thousands to just over 1,000 by focusing on vulnerabilities that:

Have been assigned a CVE ID and have been actively exploited in the wild.

Have a clear fix, such as a vendor update.

This narrower scope lets overworked vulnerability management teams thoroughly investigate the software in their environment that has been reported to contain actively exploited vulnerabilities, which are the most likely origins of a breach.
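Applying the KEV filter to your own scan results is essentially a set intersection. This minimal sketch assumes the layout of CISA's published JSON feed of the catalog (a top-level `vulnerabilities` list whose entries carry a `cveID` field; verify against the current feed) and uses a hand-written, two-entry stand-in so it runs offline. The function names and the placeholder `CVE-0000-0001` finding are hypothetical.

```python
import json
from typing import Iterable, List, Set


def kev_cve_ids(catalog: dict) -> Set[str]:
    """Collect the CVE IDs listed in a parsed KEV catalog."""
    return {entry["cveID"] for entry in catalog.get("vulnerabilities", [])}


def kev_findings(scanner_cves: Iterable[str], catalog: dict) -> List[str]:
    """Keep only the scanner findings that also appear in the KEV catalog."""
    return sorted(set(scanner_cves) & kev_cve_ids(catalog))


# Abbreviated stand-in for the real feed (real entries carry many more fields).
sample_catalog = json.loads("""
{"vulnerabilities": [
  {"cveID": "CVE-2021-44228"},
  {"cveID": "CVE-2017-0144"}
]}
""")

print(kev_findings(["CVE-2021-44228", "CVE-0000-0001"], sample_catalog))
# ['CVE-2021-44228']
```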

Rethinking vulnerability management to prioritize risk

With CISA KEV’s narrower list of vulnerabilities driving their workflows, security teams are spending less time patching software (a laborious and low-value task) and more time understanding their organization’s resilience against these proven attack vectors. Many vulnerability management teams have shifted from patching to testing whether:

Software in their environment can be exploited through CISA KEV vulnerabilities.

Their compensating controls detect and block exploitation attempts.

This helps teams gauge the real risk to their organization and the value of their investments in security protections.
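The two test outcomes above can be combined into a simple triage rule. This is a hypothetical decision table, not CISA or Gartner guidance, and the `Action` labels are illustrative; a KEV entry that proves unexploitable in one environment may still warrant remediation.

```python
from enum import Enum


class Action(Enum):
    REMEDIATE_NOW = "remediate now"
    SCHEDULE_FIX = "keep the control in place, schedule the fix"
    LOWER_PRIORITY = "lower priority, re-test later"


def triage(exploitable: bool, control_blocked: bool) -> Action:
    """Hypothetical decision rule for a KEV finding after exploit testing.

    exploitable:     testing confirmed the vulnerability can be exploited here
    control_blocked: a compensating control detected and stopped the attempt
    """
    if exploitable and not control_blocked:
        return Action.REMEDIATE_NOW   # proven, unmitigated exposure
    if exploitable:
        return Action.SCHEDULE_FIX    # mitigated for now, but the fix is still owed
    return Action.LOWER_PRIORITY      # not exploitable in this environment per the test


print(triage(exploitable=True, control_blocked=False).value)  # remediate now
```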

This shift toward testing CISA KEV catalog vulnerabilities shows that organizations are maturing from traditional vulnerability management programs to Gartner-defined Continuous Threat Exposure Management (CTEM) programs that “surface and actively prioritize whatever most threatens your business.” This focus on proven rather than theoretical risk is pushing teams to acquire new skills and tools for executing exploits across their enterprise.

ASM’s role in continuous vulnerability intelligence

An attack surface management (ASM) solution helps you understand cyber risk with continuous asset discovery and risk prioritization.
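Continuous asset discovery boils down to comparing successive snapshots of what is exposed externally and reviewing what changed. In this minimal sketch, assets are assumed to be `host:port` strings; `diff_attack_surface` and the example.com hosts are hypothetical, and a real ASM tool tracks far richer data.

```python
from typing import Dict, Set


def diff_attack_surface(previous: Set[str], current: Set[str]) -> Dict[str, Set[str]]:
    """Compare two discovery snapshots of externally visible assets."""
    return {
        "new": current - previous,        # newly exposed: review these first
        "removed": previous - current,    # no longer visible
        "unchanged": current & previous,
    }


yesterday = {"www.example.com:443", "vpn.example.com:443"}
today = {"www.example.com:443", "vpn.example.com:443", "staging.example.com:8080"}

changes = diff_attack_surface(yesterday, today)
print(sorted(changes["new"]))  # ['staging.example.com:8080']
```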

Continuous testing, a CTEM pillar, requires programs to “validate how attacks might work and how systems might react” to ensure security resources are focused on the most pressing risks. According to Gartner, “organizations that prioritize based on a continuous threat exposure management program will be three times less likely to suffer a breach.”

CTEM solutions strengthen cybersecurity defenses beyond typical vulnerability management programs by focusing on the most likely breaches. Stopping breaches matters because their average cost keeps rising: IBM’s Cost of a Data Breach research shows a 15% increase over three years, to USD 4.45 million. As skilled staff become scarcer and security budgets tighten, consider giving your teams a narrower focus, such as CISA KEV vulnerabilities, and equipping them with tools to test exploitability and assess the robustness of their cybersecurity defenses.
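Working backwards from the figures quoted above, and assuming the 15% increase is measured against the average cost three years earlier, gives the earlier average and the implied compound annual growth:

```latex
C_{t-3} = \frac{4.45}{1.15} \approx 3.87 \ \text{million USD},
\qquad
g = 1.15^{1/3} - 1 \approx 0.048 \ (\approx 4.8\% \text{ per year})
```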

Checking exploitable vulnerabilities using IBM Security Randori

IBM Security Randori, an attack surface management solution, finds your external vulnerabilities from an adversarial perspective. It continuously validates an organization’s external attack surface and reports exploitable flaws.

A sophisticated ransomware attack hit Armellini Logistics in December 2019. The company recovered quickly and decided to take a more proactive approach to prevention. Armellini uses Randori Recon to monitor external risk and to update its asset and vulnerability management systems as new cloud and SaaS applications launch. Armellini increasingly relies on Randori Recon’s target temptation analysis to prioritize which vulnerabilities to fix. This insight has helped the Armellini team lower company risk without disrupting business operations.

In addition to managing vulnerabilities, the vulnerability validation feature checks the exploitability of CVEs like CVE-2023-7992, a zero-day vulnerability in Zyxel NAS systems found and reported by IBM X-Force Applied Research. This verification reduces noise and lets clients act on genuine threats and retest to see if mitigation or remediation worked.

Read more on Govindhtech.com

The Dynamic Role of Full Stack Developers in Modern Software Development

Introduction: In the rapidly evolving landscape of software development, full stack developers have become indispensable, bridging the gap between front-end and back-end development. Their versatility lets them oversee the entire software development lifecycle, from conception to deployment. In this post, we'll look at the multifaceted responsibilities of full stack developers and their pivotal role in building innovative, user-centric web applications.

Understanding the Versatility of Full Stack Developers:

Full stack developers serve as the linchpins of software development teams, blending their proficiency in front-end and back-end technologies to create cohesive and scalable solutions. Let's explore the diverse responsibilities that define their role:

End-to-End Development Mastery: At the core of full stack development lies the ability to navigate the entire software development lifecycle with finesse. Full stack developers possess a comprehensive understanding of both front-end and back-end technologies, empowering them to conceptualize, design, implement, and deploy web applications with efficiency and precision.

Front-End Expertise: On the front-end, full stack developers are entrusted with crafting engaging and intuitive user interfaces that captivate audiences. Leveraging their command of HTML, CSS, and JavaScript, they breathe life into designs, ensuring seamless navigation and an exceptional user experience across devices and platforms.

Back-End Proficiency: In the realm of back-end development, full stack developers focus on architecting the robust infrastructure that powers web applications. They use server-side languages, runtimes, and frameworks such as Python, Node.js, or Ruby on Rails to handle data storage, processing, and authentication, laying the groundwork for scalable and resilient applications.
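Authentication usually starts with storing passwords safely. Below is a minimal sketch using Python's standard `hashlib.pbkdf2_hmac` with a per-user random salt and a constant-time comparison. The helper names are hypothetical, and the iteration count is an assumption to tune against current guidance (such as the OWASP Password Storage Cheat Sheet) and your hardware; many teams use a dedicated Argon2 or bcrypt library instead.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

# Assumed work factor for PBKDF2-HMAC-SHA256; check current guidance before relying on it.
ITERATIONS = 600_000


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Derive a salted PBKDF2-HMAC-SHA256 hash; returns (salt, digest) to store."""
    if salt is None:
        salt = os.urandom(16)  # unique random salt per user
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)


salt, stored = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, stored))  # True
print(verify_password("wrong guess", salt, stored))                   # False
```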

Database Management Acumen: Full stack developers excel in database management, designing efficient schemas, optimizing queries, and safeguarding data integrity. Whether working with relational databases like MySQL or NoSQL databases like MongoDB, they implement storage solutions that align with the application's requirements and performance goals.
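The schema-design and integrity points can be illustrated with Python's built-in `sqlite3` module: constraints reject bad data, an index supports a common lookup, and parameterized queries keep user input out of the SQL text. The `users` and `orders` tables are hypothetical, and an in-memory SQLite database stands in for MySQL or another production engine.

```python
import sqlite3

conn = sqlite3.connect(":memory:")        # throwaway in-memory database for the demo
conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves foreign-key enforcement off by default
conn.executescript("""
CREATE TABLE users (
    id    INTEGER PRIMARY KEY,
    email TEXT NOT NULL UNIQUE            -- no duplicate accounts
);
CREATE TABLE orders (
    id          INTEGER PRIMARY KEY,
    user_id     INTEGER NOT NULL REFERENCES users(id),
    total_cents INTEGER NOT NULL CHECK (total_cents >= 0)
);
CREATE INDEX idx_orders_user ON orders(user_id);  -- speeds up per-user lookups
""")

with conn:  # commits on success, rolls back if an exception escapes
    cur = conn.execute("INSERT INTO users (email) VALUES (?)", ("ada@example.com",))
    user_id = cur.lastrowid
    conn.execute("INSERT INTO orders (user_id, total_cents) VALUES (?, ?)", (user_id, 1999))

# Parameterized query: the email is passed as data, never spliced into the SQL string.
row = conn.execute(
    "SELECT u.email, SUM(o.total_cents) FROM users u "
    "JOIN orders o ON o.user_id = u.id WHERE u.email = ? GROUP BY u.email",
    ("ada@example.com",),
).fetchone()
print(row)  # ('ada@example.com', 1999)
```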

API Development Ingenuity: APIs serve as the conduits that facilitate seamless communication between different components of a web application. Full stack developers are adept at designing and implementing RESTful or GraphQL APIs, enabling frictionless data exchange between the front-end and back-end systems.
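A RESTful endpoint maps a resource path and an HTTP method to a response. The sketch below uses only Python's standard `http.server` module to serve `GET /tasks/<id>` as JSON. The `TASKS` data, `TaskAPI`, and `make_server` are hypothetical, and real projects would typically use a web framework backed by a real database.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.parse import urlsplit

# Hypothetical in-memory data standing in for a database table.
TASKS = {1: {"id": 1, "title": "Write tests", "done": False}}


class TaskAPI(BaseHTTPRequestHandler):
    """Serves GET /tasks/<id> as JSON, or a JSON 404 for anything else."""

    def _send_json(self, status: int, payload: dict) -> None:
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_GET(self) -> None:
        # Ignore any query string and split "/tasks/1" into ["tasks", "1"].
        parts = urlsplit(self.path).path.strip("/").split("/")
        if len(parts) == 2 and parts[0] == "tasks" and parts[1].isdigit():
            task = TASKS.get(int(parts[1]))
            if task is not None:
                self._send_json(200, task)
                return
        self._send_json(404, {"error": "not found"})

    def log_message(self, format: str, *args) -> None:
        """Silence the default per-request logging for this demo."""


def make_server(port: int = 8000) -> ThreadingHTTPServer:
    """Bind the API to localhost; port 0 lets the OS pick a free port."""
    return ThreadingHTTPServer(("127.0.0.1", port), TaskAPI)
```

Calling `make_server().serve_forever()` serves the API on port 8000; a request to `http://127.0.0.1:8000/tasks/1` should then return the task as JSON, and an unknown ID returns a 404.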

Testing and Quality Assurance Excellence: Quality assurance is paramount in software development, and full stack developers take on the responsibility of testing and debugging web applications. They devise and execute comprehensive testing strategies, identifying and resolving issues to ensure the application meets stringent performance and reliability standards.
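Automated tests are the core of such a strategy. Below is a minimal unit-test sketch with Python's built-in `unittest`; `apply_discount` is a hypothetical business rule standing in for real application code.

```python
import unittest


def apply_discount(total_cents: int, percent: int) -> int:
    """Hypothetical business rule under test: percentage discount, rounded down."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return total_cents * (100 - percent) // 100


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(2000, 25), 1500)

    def test_rounds_down_to_whole_cents(self):
        self.assertEqual(apply_discount(999, 10), 899)  # 899.1 -> 899

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(1000, 150)
```

Saved as, say, `test_pricing.py`, the tests run with `python -m unittest`.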

Deployment and Maintenance Leadership: As the custodians of web applications, full stack developers oversee deployment to production environments and ongoing maintenance. They monitor performance metrics, address security vulnerabilities, and implement updates and enhancements to ensure the application remains robust, secure, and responsive to user needs.

Conclusion: Full stack developers embody the essence of versatility and innovation in modern software development. Their ability to navigate both front-end and back-end technologies enables them to craft sophisticated, user-centric web applications that drive business growth and enhance user experiences. As technology continues to evolve, full stack developers will remain at the forefront of digital innovation, shaping the future of software development with their ingenuity and expertise.

#full stack course#full stack developer#full stack software developer#full stack training#full stack web development
